To follow up on my last entry, I've added a Metropolis sampler to my photon tracing routine.

Well, it was a natural thing to do.

I am aware of the paper by Toshiya Hachisuka and Henrik Wann Jensen, "Robust Adaptive Photon Tracing using Photon Path Visibility".

But since I am quite familiar with Kelemen's MLT, and I didn't want to switch to the SPPM approach (I keep it as a traditional photon mapper with a shrinking radius), I implemented it quite differently. Path quality is simply the number of photons the path contributes to the photon map (the photon map's bounding box is based on the visible hit points). So in effect it just favors longer paths. Perhaps I could extend it to account for how much flux the path carries.
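To make the path quality measure concrete, here is a minimal Python sketch. It is not my actual renderer code: the names `AABB`, `bounds_of`, `path_quality` and `accept_probability` are made up for illustration, and it assumes every surface hit of a light path stores one photon and that mutations are symmetric, so the acceptance probability reduces to a plain ratio of qualities.

```python
from dataclasses import dataclass


@dataclass
class AABB:
    lo: tuple  # (x, y, z) minimum corner
    hi: tuple  # (x, y, z) maximum corner

    def contains(self, p):
        # True if point p lies inside the box (boundaries included).
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


def bounds_of(points):
    """Axis-aligned bounding box of the visible hit points."""
    xs, ys, zs = zip(*points)
    return AABB((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs)))


def path_quality(photon_hits, box):
    """Number of photons of one light path that land inside the box."""
    return sum(1 for p in photon_hits if box.contains(p))


def accept_probability(q_current, q_proposed):
    """Metropolis acceptance probability, assuming symmetric mutations."""
    if q_current == 0:
        return 1.0  # always leave a path that contributes nothing
    return min(1.0, q_proposed / q_current)
```

For example, with visible hit points at `(0, 0, 0)` and `(1, 2, 3)`, a path whose photons land at `(0.5, 1, 1)`, `(2, 0, 0)` and `(1, 2, 3)` gets quality 2, because the second photon falls outside the box. Weighting by flux would mean summing photon power instead of counting photons.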

Also, as in standard Kelemen MLT, there are 2 mutation strategies, small and large mutation. The small one still needs further testing, for example of the mutation approach and the mutation size.
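For reference, the two mutations in primary sample space can be sketched roughly like this. Again, this is an illustration and not my actual code: `large_step`, `perturb` and `mutate` are hypothetical names, `p_large = 0.3` is just a placeholder, and the small-step sizes `s1 = 1/1024` and `s2 = 1/64` are the values suggested in the Kelemen paper rather than anything I have tuned.

```python
import math
import random


def large_step(n, rng):
    """Large mutation: resample all n primary-space coordinates uniformly."""
    return [rng.random() for _ in range(n)]


def perturb(u, rng, s1=1.0 / 1024.0, s2=1.0 / 64.0):
    """Small mutation of one coordinate u in [0, 1).

    The offset is exponentially distributed between s1 and s2, applied with
    a random sign, and the result wraps around the unit interval.
    """
    dv = s2 * math.exp(-math.log(s2 / s1) * rng.random())
    if rng.random() < 0.5:
        u += dv
    else:
        u -= dv
    u -= math.floor(u)  # wrap back into the unit interval
    return u if u < 1.0 else 0.0  # guard against rounding up to exactly 1.0


def mutate(sample, rng, p_large=0.3):
    """Return (new_sample, was_large_step) for one Metropolis proposal."""
    if rng.random() < p_large:
        return large_step(len(sample), rng), True
    return [perturb(u, rng) for u in sample], False
```

A proposal is then drawn with `mutate(current_sample, random.Random())`, the photon path is traced from the new random numbers, and it is accepted or rejected based on its quality. The mutation size (`s1`, `s2`) and the large-step probability are exactly the knobs that still need testing.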

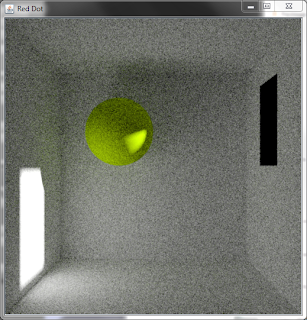

So, enough talking. Here are the pics:

Path tracing:

All images were computed in 2 minutes. Also, the Metropolis code is still fresh and messy.