I'm really sorry for not posting.

I pretty much wasn't doing anything ray tracing related :(

For starters, I would like to give you a little update:

I'm writing my master's thesis this year and it's about ray tracing.

However, I've been working commercially for quite some time now in the Java EE / Spring field and, shamefully enough, I feel like going in that direction.

I'm no longer the guy who had entire weeks to "waste" just to render those caustic reflections in the Cornell box a little bit faster.

So, that being said, writing my master's thesis could be my last ray tracing encounter.

I know how it sounds, but to me this thing is just too absorbing for a hobby, you know?

And how are you guys with your ray tracing projects? How is life?

Sincerely

Friday, May 25, 2012

Friday, July 1, 2011

Progressive photon mapping with metropolis sampling

Hi again,

To answer my last entry, I've added a Metropolis sampler to my photon tracing routine.

Well, it was a natural thing to do.

I am aware of the paper by Toshiya et al., Robust Adaptive Photon Tracing using Photon Path Visibility.

But since I am quite familiar with Kelemen's MLT, and I didn't want to switch to the SPPM approach (I keep it as a traditional photon mapper with a shrinking radius), I implemented it quite differently. Path quality equals the number of photons the path contributes to the photon map (and the photon map's bounding box is based on the visible hitpoints). So it just favors longer paths. Perhaps I could extend it to account for how much flux the path carries.

Also, as in standard Kelemen MLT, there are 2 mutation strategies, small and large mutation; the former needs some further testing of the mutation approach and size.
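A minimal sketch of the two mutation strategies in primary-sample space, with path quality taken as the number of photons a path stores. The class name, the method names and the perturbation sizes `S1` and `S2` are assumptions for illustration (the sizes follow the range suggested in Kelemen's paper); the post does not state what the actual code uses.

```java
import java.util.Random;

/** Hypothetical sketch: Kelemen-style mutations of a primary-sample vector in [0,1). */
final class PrimarySampleMutator {
    // Assumed perturbation range; the sizes used by the renderer in the post are not stated.
    static final double S1 = 1.0 / 1024.0;
    static final double S2 = 1.0 / 64.0;

    /** Large mutation: forget the current path and draw fresh uniform samples. */
    static double[] largeStep(int dimensions, Random rng) {
        double[] v = new double[dimensions];
        for (int i = 0; i < dimensions; i++) {
            v[i] = rng.nextDouble();
        }
        return v;
    }

    /** Small mutation: nudge each sample by an offset in (S1, S2], wrapping around the unit interval. */
    static double[] smallStep(double[] u, Random rng) {
        double[] v = new double[u.length];
        for (int i = 0; i < u.length; i++) {
            double dv = S2 * Math.exp(-Math.log(S2 / S1) * rng.nextDouble());
            double x = rng.nextBoolean() ? u[i] + dv : u[i] - dv;
            x -= Math.floor(x);          // wrap into [0,1)
            v[i] = (x >= 1.0) ? 0.0 : x; // guard against rounding up to exactly 1
        }
        return v;
    }

    /** Metropolis acceptance probability, with quality = number of photons the path stored. */
    static double acceptance(int currentQuality, int proposedQuality) {
        if (currentQuality <= 0) {
            return 1.0; // always leave a path that stored nothing
        }
        return Math.min(1.0, (double) proposedQuality / currentQuality);
    }
}
```

Both mutations are symmetric, so the acceptance probability reduces to the ratio of the two path qualities. Swapping the photon count for carried flux would only change what is passed to `acceptance`.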

So enough talking, pics:

Path tracing:

All images were computed in 2 minutes. Also, the Metropolis code is still fresh and messy.

Monday, June 27, 2011

photon mapping vs path tracing outdoor test

So yeah, photon mapping doesn't handle bigger scenes very well.

Glass is fairly good (good caustics make up for crap direct illumination; I trace 5m photons every pass here, so perhaps if I lowered that number I would get better direct lighting and antialiasing, but worse indirect lighting).

Photon mapping:

As expected, path tracing performs better. Maybe some fine-tuning (photons per pass and initial radius) would help, but I don't expect miracles.

Sunday, June 26, 2011

Download Red Dot demo

Hi there,

I would like to provide a runnable version of the current incarnation of my raytracer.

I know it's nothing special, but maybe someone would like to run it just for fun. I know I would.

Unpack the zip archive and there you have it: double-click reddot.jar or type:

java -Xms1g -Xmx1g -jar reddot.jar

(if you care about performance, it is actually a lot, lot better if you specify those options on the command line, before -jar so that the JVM picks them up, because otherwise the JVM will get too little memory for photons and the GC will trigger like crazy)

It reads OBJ files (with their material definitions) straight from the Blender exporter.

But since the camera is fixed, I didn't provide a GUI for importing your own scenes yet.

The image is updated every 5 seconds.

Moreover, the rendering behavior depends on 3 parameters:

- initial radius = (world_max - world_min).length / 50

- photons_per_pass = 100000

- alpha = 0.5

Initial radius, just like in regular photon mapping, is a tradeoff between smoothness and detail. If the initial radius were smaller, the caustic would be sharper from the start, but the image would be noisier overall.

Instead of basing it on the world bounding box, I should perhaps base it on the bounding box of the photon map. But in this case they are exactly the same.

Photons per pass is how many photons it shoots between ray tracing passes. It doesn't store photons for the first hit, because direct lighting is computed like in a regular path tracer.

This is also kind of a tradeoff. In scenes where there is no direct lighting (the emitter is not directly visible from the diffuse surfaces), all direct lighting computations (visibility checks mostly) are a waste of time. But in the other case, when surfaces are lit directly, those direct lighting computations help quite a lot.

And the alpha parameter controls how fast the radius shrinks from its initial value.
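A minimal sketch of how the initial radius and alpha could fit together. The initial radius follows the formula listed above; the per-pass update r_{i+1}^2 = r_i^2 * (i + alpha) / (i + 1) is an assumption borrowed from the probabilistic formulation of progressive photon mapping, since the post does not say which update rule the demo uses. The class and method names are hypothetical.

```java
/** Hypothetical sketch of a global-radius schedule for progressive photon mapping. */
final class RadiusSchedule {
    /** Initial radius as listed in the post: world bounding-box diagonal divided by 50. */
    static double initialRadius(double[] worldMin, double[] worldMax) {
        double sum = 0.0;
        for (int i = 0; i < 3; i++) {
            double d = worldMax[i] - worldMin[i];
            sum += d * d;
        }
        return Math.sqrt(sum) / 50.0;
    }

    /**
     * Radius for the next pass, given the radius used in pass number `pass` (1-based).
     * Assumed rule: r_{i+1}^2 = r_i^2 * (i + alpha) / (i + 1).
     */
    static double nextRadius(double radius, int pass, double alpha) {
        return radius * Math.sqrt((pass + alpha) / (pass + 1.0));
    }

    public static void main(String[] args) {
        double r = initialRadius(new double[] {0, 0, 0}, new double[] {3, 4, 0});
        for (int pass = 1; pass <= 5; pass++) {
            System.out.printf("pass %d: radius %.4f%n", pass, r);
            r = nextRadius(r, pass, 0.5);
        }
    }
}
```

Under this rule, alpha = 1 would keep the radius constant, and smaller values shrink it faster. With alpha = 0.5 the radius is multiplied by sqrt(0.75), roughly 0.866, after the first pass.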

Here is my 45-minute render (mobile i7):

Friday, June 24, 2011

Wednesday, June 22, 2011

Photon mapping ownz!

Hi there, it's been a while.

I've implemented progressive photon mapping, first exactly as it is in the original paper (constructing the hitmap once and shooting photons in the main loop). It was all nice and fast but quite troublesome, especially when it comes to antialiasing and glossy materials.

Then I implemented the more basic approach to PPM as in this paper.

But I have some issues for now:

- I am not sure about the adjoint BSDF for shading normals. What's obvious is that smooth normals didn't work out of the box for the Lambertian. What I did was use the original (geometric) normal and scale the contribution by |in*nor_s| / |in*nor_g|. It kinda works, but I am not sure. What about other materials? For specular and dielectric it seems to work with just the smoothed normal in place of the geometric one.

- Since I'm still using Java, I have problems with the garbage collector. I build a uniform grid for 100000 - 200000 photons every pass on all 8 threads separately, and that really kills the GC, even if I try to reuse the grid. Any suggestions?

- Shading on hard corners, for example 90 degree corners between the walls. Right now I use a simple hack that checks the angle between the photon and the surface normal, but that makes the corners black. The blackness does shrink over time but doesn't seem to ever disappear. So I wonder if it wouldn't be a better choice to leave it alone, converging to the right solution in +inf time and 1/inf radius (?)
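For reference, the scale factor from the first point can be sketched as below. It covers only the |in*nor_s| / |in*nor_g| ratio described there, not a verified adjoint BSDF. The class and method names are hypothetical, and returning zero at grazing angles is an assumption made to avoid dividing by zero.

```java
/** Hypothetical sketch of the shading-normal scale applied to a photon contribution. */
final class ShadingNormalScale {
    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    /**
     * @param in incoming photon direction (unit length)
     * @param ns interpolated shading normal (unit length)
     * @param ng geometric normal of the triangle (unit length)
     * @return |in . ns| / |in . ng|, or 0 when the photon grazes the surface
     */
    static double photonScale(double[] in, double[] ns, double[] ng) {
        double denom = Math.abs(dot(in, ng));
        if (denom < 1e-9) {
            return 0.0; // assumption: drop the contribution instead of dividing by ~0
        }
        return Math.abs(dot(in, ns)) / denom;
    }
}
```

When the shading normal equals the geometric normal the factor is 1, so flat-shaded surfaces are left unchanged.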

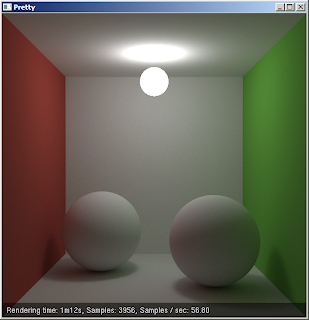

Other than that it's blazing fast. This image took around one hour to render:

But it was already pretty good after a few minutes.

Monday, November 1, 2010

Couldn't resist the GPU hype,

so I wrote an OpenCL path tracer in C. It's very similar to Dade's SmallptGPU, except that it uses float4.

I don't have a proper GPU to test it on, but I got tempted by the SSE instructions that the vector operations supposedly map to.

A performance note:

While I get 2 fps on this scene with my Core 2 Duo (no HT), my friend with his Radeon 4870 gets almost 60 fps.

I'm not yet sure if that's good enough.

(I misleadingly called frames samples, while they are samples per pixel, yea)
